Kubernetes High-Availability Installation

1. Set the hostnames

Use hostnamectl set-hostname <name> to give each host its own hostname, for example:

hostnamectl set-hostname k8s-master01

Then append the cluster entries to /etc/hosts on every node so that the names resolve:

cat >> /etc/hosts << EOF
192.168.80.10 k8s-master01
192.168.80.20 k8s-master02
192.168.80.30 k8s-master03
192.168.80.40 k8s-node01
192.168.80.50 k8s-node02
192.168.80.100 lbvip
EOF
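If this step is run twice, cat >> writes the entries a second time. Below is a minimal sketch of an idempotent alternative in plain sh. cluster_hosts and append_hosts_once are hypothetical helper names. The entries are the example addresses from above.

```shell
#!/bin/sh
# Sketch: append each cluster entry to a hosts file only if that exact line
# is not already there, so re-running the step never duplicates entries.
# The addresses mirror the example above; adjust them to your environment.

# cluster_hosts: print the cluster entries, one "IP NAME" per line.
cluster_hosts() {
    cat <<'EOF'
192.168.80.10 k8s-master01
192.168.80.20 k8s-master02
192.168.80.30 k8s-master03
192.168.80.40 k8s-node01
192.168.80.50 k8s-node02
192.168.80.100 lbvip
EOF
}

# append_hosts_once [FILE]: add every missing entry to FILE (default /etc/hosts).
append_hosts_once() {
    hosts_file=${1:-/etc/hosts}
    cluster_hosts | while IFS= read -r entry; do
        # -x: whole-line match; -F: fixed string, so dots are not regex wildcards
        grep -qxF "$entry" "$hosts_file" 2>/dev/null ||
            printf '%s\n' "$entry" >> "$hosts_file"
    done
}
```

Run append_hosts_once /etc/hosts as root on each node. Lines that are already present are left untouched.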

2. Install dependency packages

yum -y install conntrack ntpdate ntp ipvsadm ipset jq iptables curl vim sysstat libseccomp wget lrzsz net-tools git

3. Replace firewalld with iptables and flush all rules

systemctl stop firewalld && systemctl disable firewalld
yum -y install iptables-services && systemctl start iptables && systemctl enable iptables && iptables -F && service iptables save

4. Update all installed packages to their latest versions

yum update -y

5. Disable swap and SELinux

swapoff -a && sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab
setenforce 0 && sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config
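The sketch below checks the two files edited above to confirm that both changes will survive a reboot. active_swap_entries, comment_out_swap and selinux_config_mode are hypothetical helpers. The sed above looks for the literal " swap " substring; comment_out_swap instead matches on the third fstab field (the filesystem type), which assumes the standard whitespace-separated fstab layout.

```shell
#!/bin/sh
# active_swap_entries [FSTAB]: print uncommented lines whose type field is swap.
active_swap_entries() {
    awk '$1 !~ /^#/ && $3 == "swap"' "${1:-/etc/fstab}"
}

# comment_out_swap [FSTAB]: prefix every active swap line with '#'.
# Writes through a temp file, then copies back so the file keeps its permissions.
comment_out_swap() {
    fstab=${1:-/etc/fstab}
    tmpf=$(mktemp) || return 1
    awk '$1 !~ /^#/ && $3 == "swap" { print "#" $0; next } { print }' \
        "$fstab" > "$tmpf" && cat "$tmpf" > "$fstab"
    status=$?
    rm -f "$tmpf"
    return $status
}

# selinux_config_mode [CONFIG]: print the SELINUX= value (not SELINUXTYPE=).
selinux_config_mode() {
    sed -n 's/^SELINUX=//p' "${1:-/etc/selinux/config}"
}
```

Empty output from active_swap_entries and "disabled" from selinux_config_mode mean the next boot will start without swap and with SELinux disabled. For the current state, the Swap row of free -m should show 0, and getenforce reports Permissive until the reboot.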

6. Tune kernel parameters for Kubernetes

modprobe br_netfilter
cat > kubernetes.conf << EOF
net.bridge.bridge-nf-call-iptables=1
net.bridge.bridge-nf-call-ip6tables=1
net.ipv4.ip_forward=1
net.ipv4.tcp_tw_recycle=0
# Avoid swap; only allow it when the system is out of memory
vm.swappiness=0
# Do not check whether enough physical memory is available
vm.overcommit_memory=1
# Do not panic on OOM; let the OOM killer handle it
vm.panic_on_oom=0
fs.inotify.max_user_instances=8192
fs.inotify.max_user_watches=1048576
fs.file-max=52706963
fs.nr_open=52706963
net.ipv6.conf.all.disable_ipv6=1
net.netfilter.nf_conntrack_max=2310720
EOF
cp kubernetes.conf /etc/sysctl.d/kubernetes.conf
sysctl -p /etc/sysctl.d/kubernetes.conf
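sysctl -p prints what it applied, and an error in that output is easy to miss. The following is a minimal verification sketch. It assumes the file uses plain key=value lines with full-line comments. check_sysctl_conf is a hypothetical helper: it maps each key to its /proc/sys path and compares the live value as a plain string. Some keys only exist once their module is loaded, for example net.bridge.* (br_netfilter) and net.netfilter.nf_conntrack_max (nf_conntrack). Those keys are reported as missing until the module is loaded.

```shell
#!/bin/sh
# check_sysctl_conf CONF [PROCROOT]: compare every key=value in CONF with the
# live value under PROCROOT/sys (PROCROOT defaults to /proc and is a parameter
# only so the check can be exercised against a fake tree).
# Prints one line per problem and returns 1 if there is any.
check_sysctl_conf() {
    conf=$1
    root=${2:-/proc}
    rc=0
    # Keep only setting lines: drop blanks and full-line '#' or ';' comments.
    settings=$(grep -Ev '^[[:space:]]*([#;]|$)' "$conf" || true)
    while IFS='=' read -r key val; do
        [ -z "$key" ] && continue
        key=$(printf '%s' "$key" | tr -d '[:space:]')
        val=$(printf '%s' "$val" | sed 's/^[[:space:]]*//; s/[[:space:]]*$//')
        # net.ipv4.ip_forward -> /proc/sys/net/ipv4/ip_forward
        path="$root/sys/$(printf '%s' "$key" | tr . /)"
        if [ ! -r "$path" ]; then
            echo "missing: $key"
            rc=1
        elif [ "$(cat "$path")" != "$val" ]; then
            echo "mismatch: $key want=$val got=$(cat "$path")"
            rc=1
        fi
    done <<EOF
$settings
EOF
    return $rc
}
```

Run it as: check_sysctl_conf /etc/sysctl.d/kubernetes.conf && echo ok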

7. Set the time zone

# Set the system time zone to Asia/Shanghai
timedatectl set-timezone Asia/Shanghai
# Keep the hardware clock (RTC) in UTC
timedatectl set-local-rtc 0
# Restart the services that depend on the system time
systemctl restart rsyslog && systemctl restart crond

8. Stop unnecessary services

# Needed on CentOS 7; not needed on CentOS 8.1
systemctl stop postfix && systemctl disable postfix

9. Configure rsyslogd and systemd-journald

# Directory for persistent journal storage
mkdir -p /var/log/journal
mkdir -p /etc/systemd/journald.conf.d
cat > /etc/systemd/journald.conf.d/99-prophet.conf << EOF
[Journal]
# Persist logs to disk
Storage=persistent
# Compress archived logs
Compress=yes
SyncIntervalSec=5m
RateLimitInterval=30s
RateLimitBurst=1000
# Maximum disk space used by the journal
SystemMaxUse=10G
# Maximum size of a single journal file: 200M
SystemMaxFileSize=200M
# Keep logs for 2 weeks
MaxRetentionSec=2week
# Do not forward logs to syslog
ForwardToSyslog=no
EOF
systemctl restart systemd-journald

10. Upgrade to a newer kernel

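The stock 3.10.x kernel shipped with CentOS 7.x has bugs that can make Docker and Kubernetes unstable. Upgrading to kernel 4.4.x or later is recommended.

rpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.org
yum -y install https://www.elrepo.org/elrepo-release-7.0-4.el7.elrepo.noarch.rpm
yum --enablerepo=elrepo-kernel install -y kernel-lt
# After installation, check that the new kernel's menuentry in /boot/grub2/grub.cfg contains an initrd16 line; if it does not, install again
# Boot from the new kernel by default (use the exact title of the kernel you installed)
grub2-set-default 'CentOS Linux (4.4.215-1.el7.elrepo.x86_64) 7 (Core)'
# Reboot the system
reboot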
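Two small checks are useful here: whether the running kernel is already new enough, and which exact title to pass to grub2-set-default. The sketch assumes GNU sort (for -V). It also assumes the standard grub.cfg layout, where each top-level entry starts with menuentry '<title>'. kernel_at_least and grub_menu_entries are hypothetical helper names.

```shell
#!/bin/sh
# kernel_at_least RELEASE MIN: succeed if kernel RELEASE (e.g. `uname -r`) >= MIN.
kernel_at_least() {
    # Keep only the leading numeric part: 3.10.0-1062.el7.x86_64 -> 3.10.0
    cur=$(printf '%s' "$1" | sed 's/[^0-9.].*$//')
    # If MIN sorts first (or equal), the current version is at least MIN.
    [ "$(printf '%s\n%s\n' "$2" "$cur" | sort -V | head -n 1)" = "$2" ]
}

# grub_menu_entries [GRUB_CFG]: list top-level menuentry titles with their
# boot index (0 first); grub2-set-default accepts either the index or the title.
grub_menu_entries() {
    awk -F"'" 'BEGIN { n = 0 } /^menuentry / { print n " : " $2; n++ }' \
        "${1:-/boot/grub2/grub.cfg}"
}
```

Typical use: kernel_at_least "$(uname -r)" 4.4 || echo 'kernel upgrade needed', then grub_menu_entries /boot/grub2/grub.cfg to copy the title for grub2-set-default.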

11. Disable NUMA

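cp /etc/default/grub{,.bak}
vim /etc/default/grub
# Append 'numa=off' to the GRUB_CMDLINE_LINUX line, for example:
GRUB_CMDLINE_LINUX="crashkernel=auto spectre_v2=retpoline rhgb quiet numa=off"
# Regenerate the GRUB configuration so the change takes effect on the next boot
grub2-mkconfig -o /boot/grub2/grub.cfg

12. Prerequisites for running kube-proxy in IPVS mode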

cat > /etc/sysconfig/modules/ipvs.modules << EOF
#!/bin/bash
modprobe -- ip_vs
modprobe -- ip_vs_rr
modprobe -- ip_vs_wrr
modprobe -- ip_vs_sh
modprobe -- nf_conntrack_ipv4
EOF
chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep -e ip_vs -e nf_conntrack_ipv4
# Reboot the system
reboot
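The lsmod | grep check above has to be read by eye. The following sketch scripts it instead. It assumes the /proc/modules format (module name in the first column), which is what lsmod itself reads. missing_modules is a hypothetical helper. Modules compiled into the kernel do not appear in /proc/modules, so a built-in module would be reported as missing. On kernels 4.19 and later, nf_conntrack_ipv4 was merged into nf_conntrack, so check for that name instead on newer kernels.

```shell
#!/bin/sh
# missing_modules MODULES_FILE MODULE...: print every MODULE not listed in
# MODULES_FILE (normally /proc/modules); return 1 if any is missing.
missing_modules() {
    src=$1
    shift
    miss=0
    for m in "$@"; do
        # Column 1 of /proc/modules is the module name.
        if ! awk -v m="$m" '$1 == m { found = 1 } END { exit !found }' "$src"; then
            echo "$m"
            miss=1
        fi
    done
    return $miss
}
```

After the reboot, run: missing_modules /proc/modules ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack_ipv4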

13. Install software

# Install Docker
yum install -y yum-utils device-mapper-persistent-data lvm2

cd /etc/yum.repos.d

wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
# Create the /etc/docker directory
mkdir /etc/docker
# /etc/docker/daemon.json should end up with the following content:
cat > /etc/docker/daemon.json << EOF
{
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m"
  },
  "insecure-registries": ["https://hub.mfyxw.com"],
  "registry-mirrors": ["https://jltw059v.mirror.aliyuncs.com"]
}
EOF
# List the available docker-ce versions
yum list docker-ce --showduplicates | sort -r
# Install either the latest version:
yum install -y docker-ce
# or a specific version:
yum install -y docker-ce-18.06.3.ce-3.el7
mkdir -p /etc/systemd/system/docker.service.d
# Restart the Docker service
systemctl daemon-reload && systemctl restart docker && systemctl enable docker

14. Install haproxy and keepalived

yum -y install haproxy keepalived

15. Add the haproxy configuration

# Back up the original configuration file before modifying it

mv /etc/haproxy/haproxy.cfg{,.bak}

# Replace the configuration with the following content

cat > /etc/haproxy/haproxy.cfg << EOF
global
    maxconn 2000
    ulimit-n 16384
    log 127.0.0.1 local0 err
    stats timeout 30s

defaults
    log global
    mode http
    option httplog
    timeout connect 5000
    timeout client 50000
    timeout server 50000
    timeout http-request 15s
    timeout http-keep-alive 15s

frontend monitor-in
    bind *:33305
    mode http
    option httplog
    monitor-uri /monitor

listen stats
    bind *:8006
    mode http
    stats enable
    stats hide-version
    stats uri /stats
    stats refresh 30s
    stats realm Haproxy\ Statistics
    stats auth admin:admin

frontend k8s-master
    # Use a high port to avoid clashing with the ports used by Prometheus + Grafana
    bind 0.0.0.0:58443
    bind 127.0.0.1:58443
    mode tcp
    option tcplog
    tcp-request inspect-delay 5s
    default_backend k8s-master

backend k8s-master
    mode tcp
    option tcplog
    option tcp-check
    balance roundrobin
    default-server inter 10s downinter 5s rise 2 fall 2 slowstart 60s maxconn 250 maxqueue 256 weight 100
    # Replace the hostnames and IP addresses below with those of your environment
    server k8s-master01 192.168.80.10:6443 check
    server k8s-master02 192.168.80.20:6443 check
    server k8s-master03 192.168.80.30:6443 check
EOF
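Only the server lines in the backend change between environments. Below is a minimal generator sketch. haproxy_servers and the APISERVER_PORT variable are hypothetical. 6443 is kube-apiserver's default port, as used in the configuration above.

```shell
#!/bin/sh
# haproxy_servers NAME:IP...: print one backend "server" line per control-plane
# node, with health checks enabled. Port defaults to 6443 (kube-apiserver).
haproxy_servers() {
    port=${APISERVER_PORT:-6443}
    for pair in "$@"; do
        name=${pair%%:*}   # text before the first ':'
        ip=${pair#*:}      # text after the first ':'
        printf '    server %s %s:%s check\n' "$name" "$ip" "$port"
    done
}
```

For example, haproxy_servers k8s-master01:192.168.80.10 k8s-master02:192.168.80.20 k8s-master03:192.168.80.30 prints the three lines used above. After editing, haproxy -c -f /etc/haproxy/haproxy.cfg validates the file before the service is started.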

# Copy the finished haproxy.cfg to /etc/haproxy/ on the other two master nodes
scp -r /etc/haproxy/haproxy.cfg k8s-master02:/etc/haproxy/
scp -r /etc/haproxy/haproxy.cfg k8s-master03:/etc/haproxy/

16. Add the keepalived configuration

#对原有的keepalived配置文件做备份mv /etc/keepalived/keepalived.conf{,.bak}#直接复制如下内容到shell中回车即可(已经重新对keepalived.conf文件修改)cat > /etc/keepalived/keepalived.conf << EOF

! Configuration File for keepalived

global_defs {

router_id LVS_DEVEL

}

vrrp_script chk_apiserver {

script "/etc/keepalived/check_apiserver.sh"

interval 2

weight -5

fall 3

rise 2}

vrrp_instance VI_1 {

state MASTER

interface ens33

mcast_src_ip 192.168.80.10 #此处请填写相对应的本地的IP地址,IP不能相同,每个master节点的请另行修改

virtual_router_id 51

priority 102 #优先级高的能优先获得vip地址,优先级不能相同,每个master节点的请另行修改

advert_int 2

authentication {

auth_type PASS

auth_pass K8SHA_KA_AUTH

}

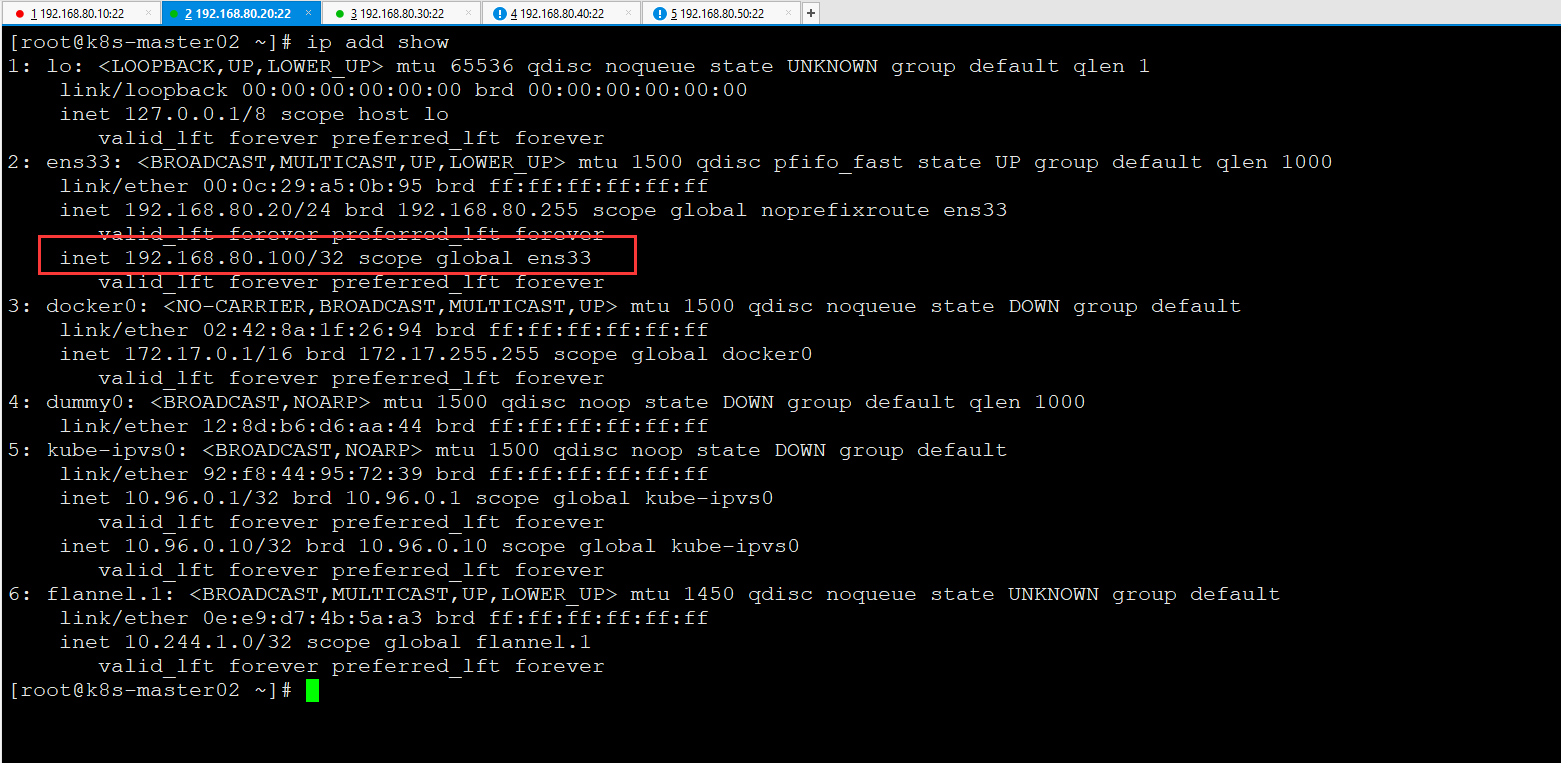

virtual_ipaddress { 192.168.80.100 #此处填写你要设定的VIP地址

}# track_script {# chk_apiserver# }}

EOF#复制keepalived.conf文件到另外二个master节点,复制过去后请自动修改IP地址和优先级,VIP地址不需要修改scp -r /etc/keepalived/keepalived.conf k8s-master02:/etc/keepalived/scp -r /etc/keepalived/keepalived.conf k8s-master03:/etc/keepalived/17.为keepalived添加检测脚本

# Quote 'EOF' so that $(...) and $variables are written into the script instead of being expanded now
cat > /etc/keepalived/check_apiserver.sh << 'EOF'
#!/bin/bash
function check_apiserver() {
    for ((i=0;i<5;i++)); do
        apiserver_job_id=$(pgrep kube-apiserver)
        if [[ ! -z $apiserver_job_id ]]; then
            return
        else
            sleep 2
        fi
        apiserver_job_id=0
    done
}
# 1: running  0: stopped
check_apiserver
if [[ $apiserver_job_id -eq 0 ]]; then
    /usr/bin/systemctl stop keepalived
    exit 1
else
    exit 0
fi
EOF
# Make the check script executable
chmod a+x /etc/keepalived/check_apiserver.sh
# Copy the check script to /etc/keepalived on the other master nodes
scp -r /etc/keepalived/check_apiserver.sh k8s-master02:/etc/keepalived/
scp -r /etc/keepalived/check_apiserver.sh k8s-master03:/etc/keepalived/

18. Start the haproxy and keepalived services

systemctl enable --now haproxy && systemctl enable --now keepalived
# Check the status of haproxy and keepalived
systemctl status haproxy keepalived
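The next steps run on the master that currently owns the VIP. To find that node, look for the VIP among each node's addresses. holds_vip is a hypothetical helper. It reads the one-line-per-address output of ip -o -4 addr show, where the fourth field is address/prefix.

```shell
#!/bin/sh
# holds_vip VIP: read `ip -o -4 addr show` output on stdin and succeed if
# VIP is assigned to any interface on this node.
holds_vip() {
    awk -v vip="$1" '{
        split($4, a, "/")          # "192.168.80.100/32" -> a[1] = address
        if (a[1] == vip) found = 1
    } END { exit !found }'
}
```

On each master: ip -o -4 addr show | holds_vip 192.168.80.100 && echo 'VIP is on this node'. To check that haproxy is answering, probe the monitor-in frontend from haproxy.cfg with curl -fsS http://127.0.0.1:33305/monitor.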

19. Install kubeadm (masters and nodes)

# Add the yum repository on every node (masters and nodes)
cat << EOF > /etc/yum.repos.d/kubernetes.repo
[Kubernetes]
name=Kubernetes repo
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=1
repo_gpgcheck=0
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF
# Install kubeadm, kubectl and kubelet (masters and nodes)
yum -y install kubeadm-1.17.1 kubectl-1.17.1 kubelet-1.17.1
# Or install the latest versions instead:
# yum -y install kubeadm kubectl kubelet
# Enable kubelet at boot (masters and nodes)
systemctl enable kubelet.service

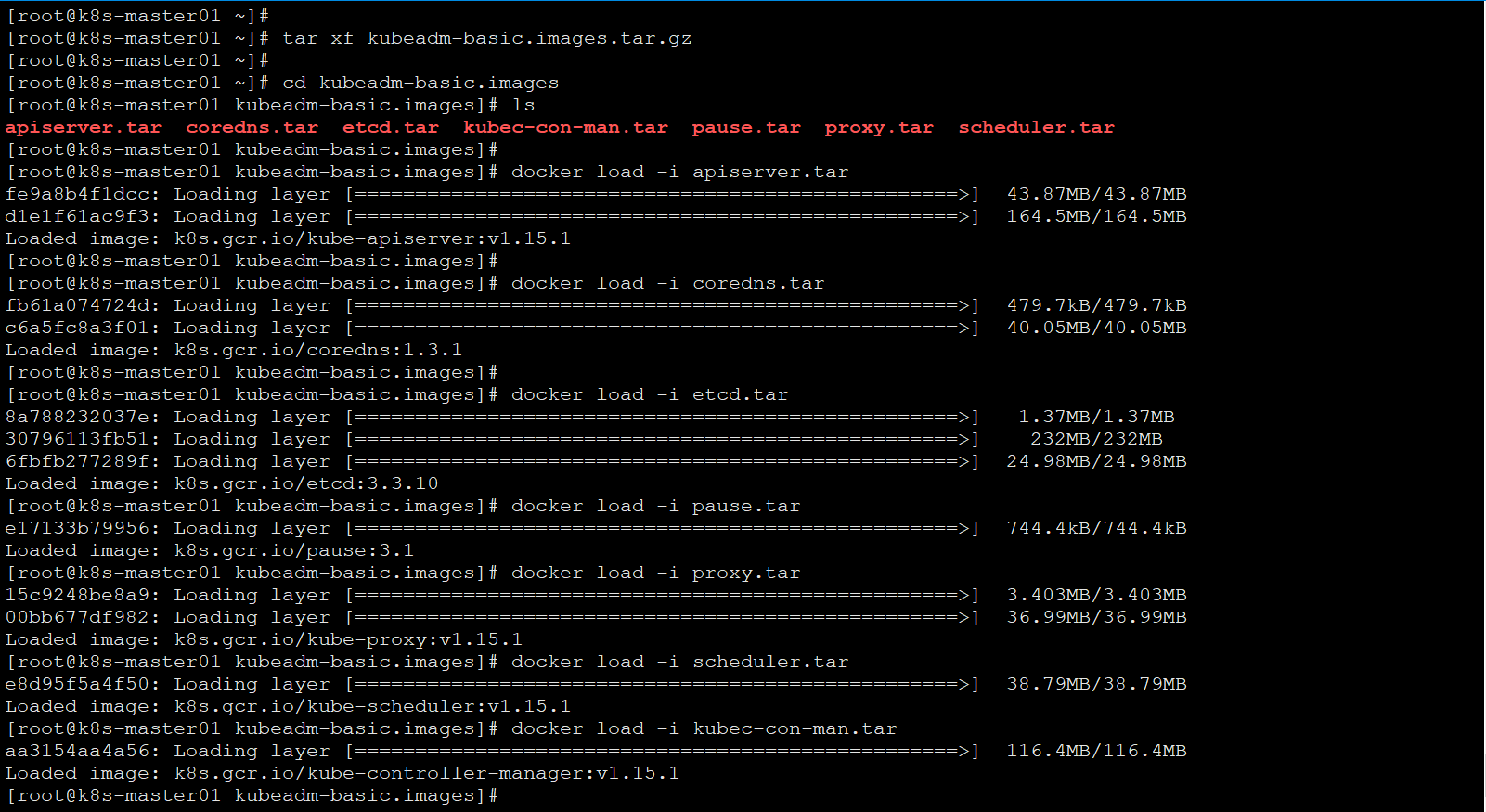

20. Import the required images

# Upload the downloaded image bundle to each node, then extract and import it. (The images normally require unrestricted internet access to download, so they were pulled from Alibaba Cloud mirrors and packed into an archive instead.)
# Master nodes: upload kubeadm-basic.images.tar.gz to the master node and extract it
tar xf kubeadm-basic.images.tar.gz
cd kubeadm-basic.images
# Import the images manually (or with the script below)
docker load -i apiserver_1.17.1.tar && docker load -i etcd_3.4.3-0.tar && docker load -i kube-con-manager_1.17.1.tar && docker load -i proxy_1.17.1.tar && docker load -i coredns_1.6.5.tar && docker load -i flannel.tar && docker load -i pause_3.1.tar && docker load -i scheduler_1.17.1.tar
# Worker nodes: upload kubeadm-basic.images.tar.gz to the worker node and extract it
tar xf kubeadm-basic.images.tar.gz
cd kubeadm-basic.images
# Worker nodes only need these images
docker load -i coredns_1.6.5.tar && docker load -i flannel.tar && docker load -i pause_3.1.tar && docker load -i proxy_1.17.1.tar
# Script-based import (adjust the directory to wherever the archive was extracted)
cat > /root/import_image.sh << 'EOF'
ls /root/kubeadm-basic.images > /tmp/images-list.txt
cd /root/kubeadm-basic.images
for i in $(cat /tmp/images-list.txt)
do
    docker load -i "$i"
done
rm -fr /tmp/images-list.txt
EOF
# Make the script executable
chmod a+x /root/import_image.sh
# Run the script
bash /root/import_image.sh
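Before running kubeadm init, it is worth confirming that every image kubeadm expects is present locally. The sketch compares two lists of repository:tag lines. missing_images is a hypothetical helper. Images loaded from the archive only match if they carry the names kubeadm expects (for example k8s.gcr.io/...), so retagged images must use those names.

```shell
#!/bin/sh
# missing_images REQUIRED PRESENT: print each line of REQUIRED (one
# repository:tag per line) that does not appear verbatim in PRESENT;
# return 1 if anything is missing.
missing_images() {
    # -v invert, -x whole-line match, -F fixed strings, -f patterns from file
    missing=$(grep -vxF -f "$2" "$1" || true)
    if [ -n "$missing" ]; then
        printf '%s\n' "$missing"
        return 1
    fi
    return 0
}
```

For example, on a master node:

kubeadm config images list --kubernetes-version v1.17.1 > /tmp/required.txt
docker images --format '{{.Repository}}:{{.Tag}}' > /tmp/present.txt
missing_images /tmp/required.txt /tmp/present.txt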

21. Generate the kubeadm init configuration file

# On the master node that holds the VIP, generate the default init configuration
kubeadm config print init-defaults > kubeadm-init.yaml

# The following two warnings can be ignored (kubeadm 1.17 prints them when generating the default configuration)

W0309 10:29:42.003091 2724 validation.go:28] Cannot validate kube-proxy config - no validator is available

W0309 10:29:42.004138 2724 validation.go:28] Cannot validate kubelet config - no validator is available

# Edit the generated kubeadm-init.yaml as follows
apiVersion: kubeadm.k8s.io/v1beta2
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.80.10   # IP address of this master host; change it for your environment
  bindPort: 6443
nodeRegistration:
  criSocket: /var/run/dockershim.sock
  name: lbvip
  taints:
  - effect: NoSchedule
    key: node-role.kubernetes.io/master
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta2
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controlPlaneEndpoint: "192.168.80.100:58443"   # add this line if it is missing: the HA VIP and the haproxy port
controllerManager: {}
dns:
  type: CoreDNS
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: k8s.gcr.io   # pulling from k8s.gcr.io needs unrestricted internet access; you can switch to the Alibaba Cloud mirror below
#imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers   # Alibaba Cloud mirror
kind: ClusterConfiguration
kubernetesVersion: v1.17.1   # must match the version of the images you downloaded (and of kubeadm)
networking:
  dnsDomain: cluster.local
  podSubnet: 10.244.0.0/16   # add the pod network; it must match the network in the flannel yaml
  serviceSubnet: 10.96.0.0/12
scheduler: {}
# Append the following after "scheduler: {}" to enable IPVS (without it, kube-proxy falls back to iptables):
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
featureGates:
  SupportIPVSProxyMode: true
mode: ipvs

Tip: if you cannot reach k8s.gcr.io, switch imageRepository to the Alibaba Cloud mirror and check which version you need. If you did not import the images beforehand and want to pull them from the network, you can pull them first with the configuration file:

kubeadm config images pull --config kubeadm-init.yaml
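The port in controlPlaneEndpoint must match the port that haproxy's k8s-master frontend listens on (58443 here), because the join commands printed by kubeadm init reuse that endpoint. Below is a sketch of a consistency check. It assumes the single-line controlPlaneEndpoint: "host:port" form and the frontend/backend layout of the haproxy.cfg above. endpoint_port, frontend_ports and endpoint_matches_haproxy are hypothetical helpers.

```shell
#!/bin/sh
# endpoint_port KUBEADM_YAML: print the port of controlPlaneEndpoint.
endpoint_port() {
    sed -n 's/^[[:space:]]*controlPlaneEndpoint:[[:space:]]*"\{0,1\}[^:"]*:\([0-9]*\)"\{0,1\}.*$/\1/p' "$1"
}

# frontend_ports HAPROXY_CFG: print the ports bound in "frontend k8s-master".
frontend_ports() {
    awk '/^frontend k8s-master/ { in_fe = 1; next }
         /^(frontend|backend|listen|defaults|global)/ { in_fe = 0 }
         in_fe && $1 == "bind" { n = split($2, a, ":"); print a[n] }' "$1" | sort -u
}

# endpoint_matches_haproxy KUBEADM_YAML HAPROXY_CFG: succeed if they agree.
endpoint_matches_haproxy() {
    want=$(endpoint_port "$1")
    [ -n "$want" ] && frontend_ports "$2" | grep -qx "$want"
}
```

Run it as: endpoint_matches_haproxy kubeadm-init.yaml /etc/haproxy/haproxy.cfg || echo 'controlPlaneEndpoint port does not match haproxy'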

22. Before initializing Kubernetes, make sure haproxy and keepalived are running

# On the master node that holds the VIP, check that haproxy and keepalived are running
systemctl status haproxy keepalived

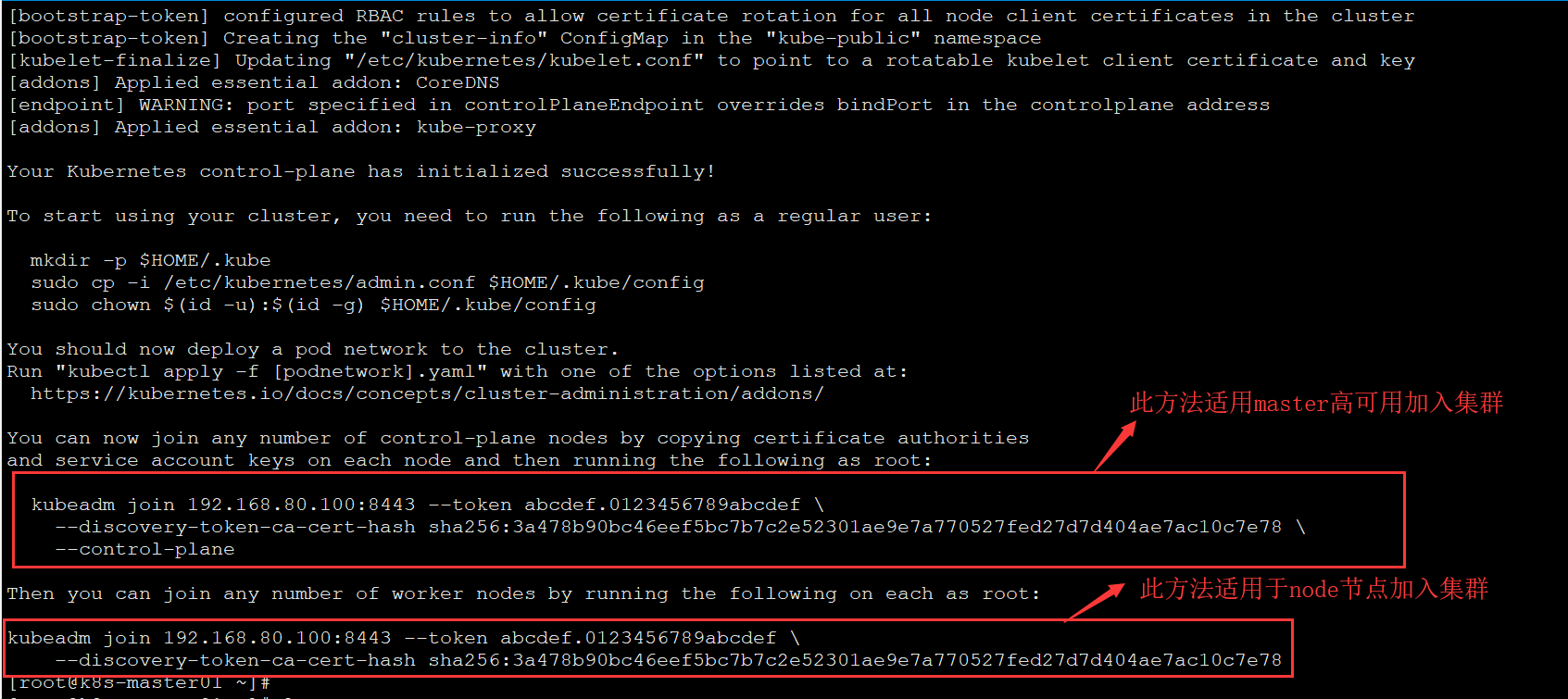

23. Initialize the cluster with the configuration file

# On the master node that holds the VIP, initialize the cluster with the configuration file and save the output to a log file
kubeadm init --config kubeadm-init.yaml | tee kubeadm-init.log

Copy the certificates from the primary master to the other two master nodes. The following shell script does the copying:

# The script below only works if passwordless SSH login has been set up
# Quote 'EOF' so that ${USER} and $host are expanded when the script runs, not when it is written
cat > /root/cert.sh << 'EOF'
#!/bin/bash
USER=root
CONTROL_PLANE_IPS="k8s-master02 k8s-master03"
for host in $CONTROL_PLANE_IPS; do
    ssh "${USER}"@${host} "mkdir -p /etc/kubernetes/pki/etcd"
    scp /etc/kubernetes/pki/{ca.crt,ca.key,sa.key,sa.pub,front-proxy-ca.crt,front-proxy-ca.key} "${USER}"@$host:/etc/kubernetes/pki
    scp /etc/kubernetes/pki/etcd/{ca.key,ca.crt} "${USER}"@$host:/etc/kubernetes/pki/etcd/
    scp /etc/kubernetes/admin.conf "${USER}"@$host:/etc/kubernetes
done
EOF

chmod a+x /root/cert.sh

bash /root/cert.sh

# If passwordless SSH login is not set up, follow these steps instead:

# Run the following command on both k8s-master02 and k8s-master03

mkdir -pv /etc/kubernetes/pki/etcd
# Then, on k8s-master01, copy its certificates to the matching locations on the other master nodes

scp /etc/kubernetes/pki/{ca.crt,ca.key,sa.key,sa.pub,front-proxy-ca.crt,front-proxy-ca.key} k8s-master02:/etc/kubernetes/pki

scp /etc/kubernetes/pki/{ca.crt,ca.key,sa.key,sa.pub,front-proxy-ca.crt,front-proxy-ca.key} k8s-master03:/etc/kubernetes/pki

scp /etc/kubernetes/pki/etcd/{ca.key,ca.crt} k8s-master02:/etc/kubernetes/pki/etcd/

scp /etc/kubernetes/pki/etcd/{ca.key,ca.crt} k8s-master03:/etc/kubernetes/pki/etcd/

scp /etc/kubernetes/admin.conf k8s-master02:/etc/kubernetes

scp /etc/kubernetes/admin.conf k8s-master03:/etc/kubernetes

To join the other master nodes to the cluster, add an extra parameter to the join command:

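# --experimental-control-plane is for joining additional HA master nodes on 1.14 and earlier
kubeadm join vip:port --token **** --discovery-token-ca-cert-hash *** --experimental-control-plane
# On 1.17, join additional HA master nodes as follows (the endpoint is the VIP and haproxy port from controlPlaneEndpoint):
kubeadm join 192.168.80.100:58443 --token **** \
    --discovery-token-ca-cert-hash **** \
    --control-plane

Tip: replace **** with the values printed at the end of kubeadm init on your own master node.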

Run the following commands on the master nodes. This example uses the root user; using a different user is recommended.

mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config

24. Join the worker nodes to the cluster

# Join a worker node to the cluster as follows
kubeadm join 192.168.80.100:58443 --token **** \
    --discovery-token-ca-cert-hash ****

Tip: replace **** with the values printed at the end of kubeadm init on your own master node.
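If the token or hash from kubeadm init has been lost, both can be regenerated on a master. kubeadm token create --print-join-command prints a complete worker join command with a fresh token. The hash can be computed from the cluster CA with the openssl pipeline given in the kubeadm documentation. ca_cert_hash is a hypothetical wrapper around that pipeline. It assumes an RSA CA key, which is the kubeadm default.

```shell
#!/bin/sh
# ca_cert_hash [CA_CRT]: print the SHA-256 of the CA certificate's DER-encoded
# public key, i.e. the hex part of --discovery-token-ca-cert-hash sha256:<hex>.
ca_cert_hash() {
    openssl x509 -pubkey -in "${1:-/etc/kubernetes/pki/ca.crt}" \
        | openssl rsa -pubin -outform der 2>/dev/null \
        | openssl dgst -sha256 -hex \
        | sed 's/^.* //'   # drop the "(stdin)= " style prefix
}
```

Pass the result as --discovery-token-ca-cert-hash sha256:$(ca_cert_hash /etc/kubernetes/pki/ca.crt).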

25. Install the flannel network plugin

# Download kube-flannel.yml from the flannel GitHub repository onto the primary master that holds the VIP
wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml
# Recommended: pull the flannel image from the Alibaba Cloud registry and retag it
docker pull registry.cn-hangzhou.aliyuncs.com/kube-iamges/flannel:v0.11.0-amd64
docker tag registry.cn-hangzhou.aliyuncs.com/kube-iamges/flannel:v0.11.0-amd64 quay.io/coreos/flannel:v0.11.0-amd64
# Save the image with docker save -o
docker save -o flannel.tar quay.io/coreos/flannel:v0.11.0-amd64
# Copy the saved image to the other nodes
scp -r flannel.tar k8s-master02:/root/
scp -r flannel.tar k8s-master03:/root/
scp -r flannel.tar k8s-node01:/root/
scp -r flannel.tar k8s-node02:/root/
# On each of the other nodes, load the flannel image
docker load -i flannel.tar
# On the primary master that holds the VIP, apply kube-flannel.yml
kubectl apply -f kube-flannel.yml
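The four scp commands and the docker load on each node can be folded into one loop. This sketch assumes passwordless SSH as root (as for cert.sh above) and /root as the target directory. distribute_image and run are hypothetical helpers. With DRY_RUN=1, they print the commands instead of executing them.

```shell
#!/bin/sh
# run CMD...: execute CMD, or just print it when DRY_RUN=1.
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

# distribute_image ARCHIVE HOST...: copy an image archive to /root on each
# HOST and load it there with docker load.
distribute_image() {
    archive=$1
    shift
    for h in "$@"; do
        run scp "$archive" "$h:/root/"
        run ssh "$h" "docker load -i /root/$(basename "$archive")"
    done
}
```

Run it as: distribute_image flannel.tar k8s-master02 k8s-master03 k8s-node01 k8s-node02 (prefix with DRY_RUN=1 to preview).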

26. Test high availability

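# On master03, run the following loop, then disconnect master01 from the network
while true; do sleep 1; kubectl get node; date; done
# For a short time no information is returned; after that the output stays normal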
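Instead of watching the loop scroll by, the outage can be counted. measure_outage is a hypothetical helper. It reruns a command every INTERVAL seconds. Once the command has failed and then succeeded again, it prints how many attempts failed. With a 1-second interval this gives a rough estimate of the outage in seconds. It is not exact, because each attempt also spends time in the command itself, and kubectl may wait on its own timeout.

```shell
#!/bin/sh
# measure_outage INTERVAL CMD...: run CMD every INTERVAL seconds; after CMD has
# failed at least once and then succeeds again, print the failed-attempt count.
measure_outage() {
    interval=$1
    shift
    fails=0
    while :; do
        if "$@" >/dev/null 2>&1; then
            if [ "$fails" -gt 0 ]; then
                echo "$fails"
                return 0
            fi
        else
            fails=$((fails + 1))
        fi
        sleep "$interval"
    done
}
```

Start measure_outage 1 kubectl get nodes on k8s-master03 before disconnecting k8s-master01. It returns once the API is reachable again through the VIP.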
