Installing EFK on Kubernetes (Deployment Without Persistent Storage)

1. Introduction

EFK here stands for Elasticsearch, Fluentd, and Kibana.

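Elasticsearch

Elasticsearch is an open-source search and data analytics engine built on Apache Lucene™. It is written in Java and uses Lucene at its core for all indexing and search functionality. Its goal is to hide Lucene's complexity behind a simple RESTful API and so make full-text search easy. Elasticsearch is more than Lucene and full-text search, though. It also provides: distributed real-time document storage in which every field is indexed and searchable; a distributed real-time analytics search engine; and the ability to scale out to hundreds of servers and handle petabytes of structured or unstructured data.

Elasticsearch holds multiple indices (Index). Each index contains types (Type), each type stores multiple documents (Document), and each document has multiple fields. An index is comparable to a database in a traditional relational database: it is where related documents are stored. Note that from Elasticsearch 6.x onward a newly created index can hold only a single type, and types are deprecated in 7.x. Elasticsearch uses a standard RESTful API and JSON, and clients are built and maintained for many other languages, such as Java, Python, .NET, and PHP.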
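To make the index / type / document hierarchy concrete: in the REST API a single document is addressed by a path of the form /<index>/<type>/<id>. The sketch below only illustrates that addressing. The function names, the index name demo-index and the host localhost:9200 are made up for illustration and do not come from the EFK manifests.

```shell
#!/usr/bin/env bash
# Build the REST URL for one document: /<index>/<type>/<id>.
# $1 = host:port, $2 = index, $3 = type, $4 = document id.
es_doc_url() {
  printf 'http://%s/%s/%s/%s\n' "$1" "$2" "$3" "$4"
}

# Store a JSON document (read from stdin) under that URL.
# Elasticsearch 6.x expects an explicit JSON Content-Type header.
es_put_doc() {
  curl -s -X PUT -H 'Content-Type: application/json' \
    --data-binary @- "$(es_doc_url "$1" "$2" "$3" "$4")"
}
```

With a reachable Elasticsearch instance, `echo '{"message":"hello"}' | es_put_doc localhost:9200 demo-index _doc 1` would store one document, and a plain `curl` on the same URL would read it back.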

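Fluentd

Fluentd is an open-source data collector that unifies how data is collected and consumed, which makes the data easier to use and understand. Fluentd structures data as JSON so that log data can be processed uniformly: collecting, filtering, buffering, and outputting. Its architecture is plugin-based, with input, output, filter, parser, formatter, buffer, and storage plugins, and these plugins are how Fluentd is extended and adapted.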

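Kibana

Kibana is an open-source analytics and visualization platform designed to work with Elasticsearch. With Kibana you can search, view, and interact with the data stored in Elasticsearch, and analyze and visualize it using a variety of charts, tables, and maps.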

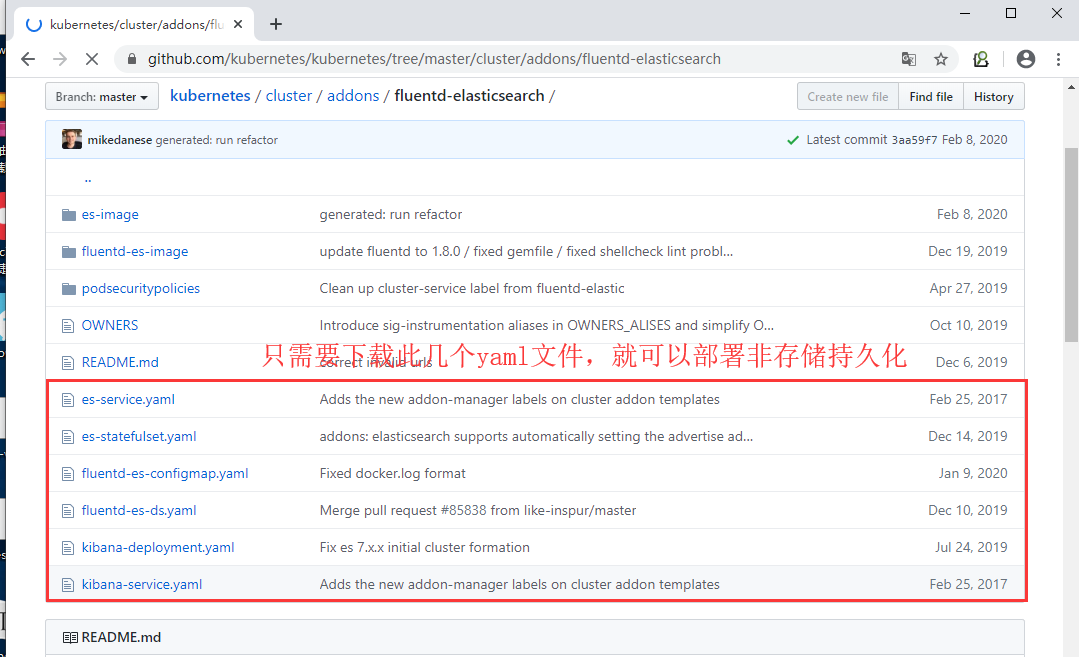

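2. Download the EFK YAML files

The Kubernetes GitHub repository

https://github.com/kubernetes/kubernetes/tree/master/cluster/addons/fluentd-elasticsearch

Note: this repository only provides a deployment without persistent storage. If you need persistent storage, modify the existing YAML files.

Download commands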

    mkdir -p /root/EFK
    cd /root/EFK
    wget https://raw.githubusercontent.com/kubernetes/kubernetes/master/cluster/addons/fluentd-elasticsearch/es-service.yaml
    wget https://raw.githubusercontent.com/kubernetes/kubernetes/master/cluster/addons/fluentd-elasticsearch/es-statefulset.yaml
    wget https://raw.githubusercontent.com/kubernetes/kubernetes/master/cluster/addons/fluentd-elasticsearch/fluentd-es-configmap.yaml
    wget https://raw.githubusercontent.com/kubernetes/kubernetes/master/cluster/addons/fluentd-elasticsearch/fluentd-es-ds.yaml
    wget https://raw.githubusercontent.com/kubernetes/kubernetes/master/cluster/addons/fluentd-elasticsearch/kibana-deployment.yaml
    wget https://raw.githubusercontent.com/kubernetes/kubernetes/master/cluster/addons/fluentd-elasticsearch/kibana-service.yaml

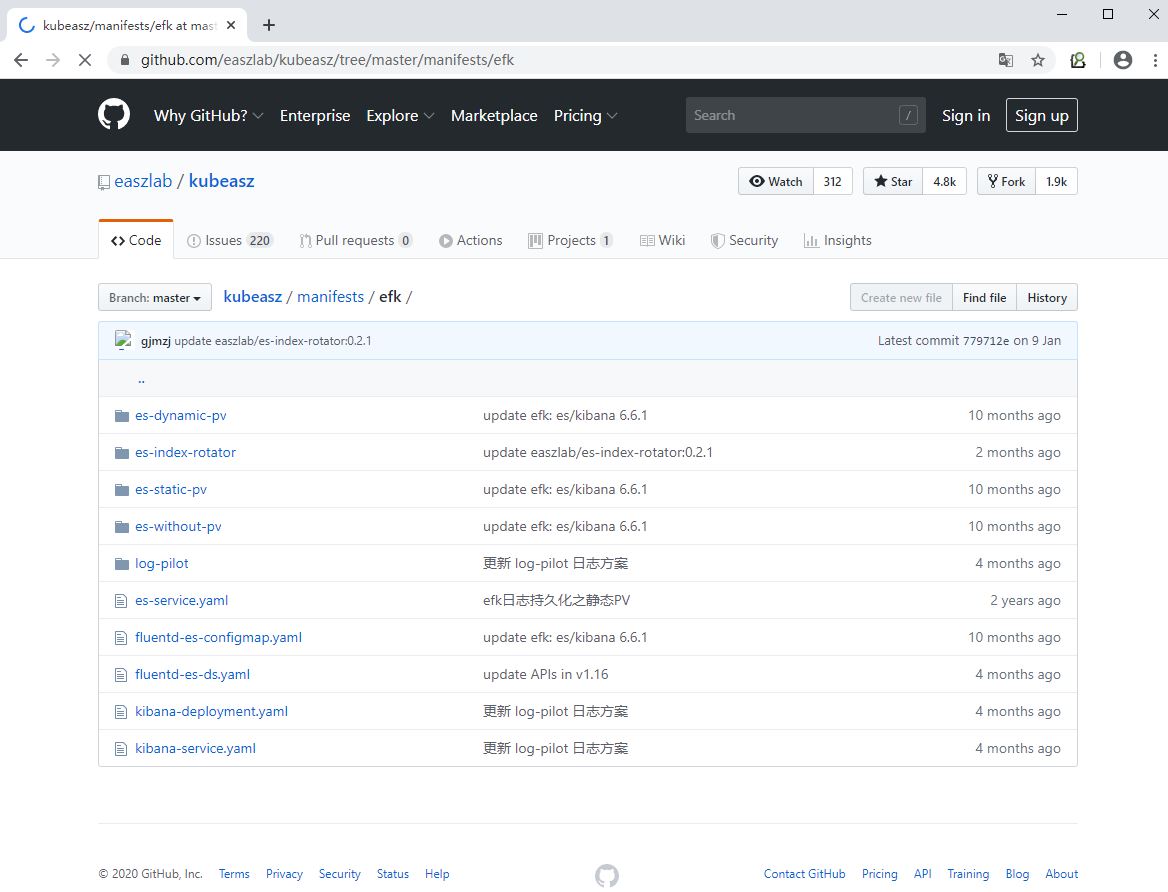
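The six downloads differ only in the file name, so they can also be driven from a list. This is a minimal sketch that assumes the same base URL and file names as the wget commands above; the helper names `efk_urls` and `download_efk` are made up for illustration. The links point at the `master` branch, whose contents can change over time.

```shell
#!/usr/bin/env bash
# Base URL and file names are copied from the wget commands in this section.
BASE_URL="https://raw.githubusercontent.com/kubernetes/kubernetes/master/cluster/addons/fluentd-elasticsearch"
EFK_FILES="es-service.yaml es-statefulset.yaml fluentd-es-configmap.yaml fluentd-es-ds.yaml kibana-deployment.yaml kibana-service.yaml"

# Print one download URL per line (hypothetical helper).
efk_urls() {
  for f in $EFK_FILES; do
    printf '%s/%s\n' "$BASE_URL" "$f"
  done
}

# Download every file into the given directory (default: /root/EFK),
# stopping at the first failed download.
download_efk() {
  dest="${1:-/root/EFK}"
  mkdir -p "$dest" || return 1
  for url in $(efk_urls); do
    wget -P "$dest" "$url" || return 1
  done
}
```

Calling `download_efk` with no argument fetches everything into /root/EFK; `efk_urls` on its own just prints the list so it can be reviewed first.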

Alternatively, you can use the files from easzlab.

https://github.com/easzlab/kubeasz/tree/master/manifests/efk

Note: this repository offers both a deployment without persistent storage and deployments with persistent storage.

Download commands:

    wget https://raw.githubusercontent.com/easzlab/kubeasz/master/manifests/efk/es-without-pv/es-statefulset.yaml
    wget https://raw.githubusercontent.com/easzlab/kubeasz/master/manifests/efk/es-service.yaml
    wget https://raw.githubusercontent.com/easzlab/kubeasz/master/manifests/efk/fluentd-es-configmap.yaml
    wget https://raw.githubusercontent.com/easzlab/kubeasz/master/manifests/efk/fluentd-es-ds.yaml
    wget https://raw.githubusercontent.com/easzlab/kubeasz/master/manifests/efk/kibana-deployment.yaml
    wget https://raw.githubusercontent.com/easzlab/kubeasz/master/manifests/efk/kibana-service.yaml

Note: es-static-pv and es-dynamic-pv are the persistent-storage variants, using static and dynamic PVs respectively; refer to them if you need persistence. The es-without-pv directory is the variant without persistent storage.

3. Pull the images EFK needs

Images referenced by the original YAML files, and where they live

    elasticsearch:v7.4.2            quay.io/fluentd_elasticsearch/elasticsearch:v7.4.2
    fluentd:v2.8.0                  quay.io/fluentd_elasticsearch/fluentd:v2.8.0
    kibana-oss:7.4.2                docker.elastic.co/kibana/kibana-oss:7.4.2

Note: v7.4.2 failed in several test runs (Elasticsearch kept restarting with errors), so I switched to 6.6.1:

    elasticsearch:v6.6.1            quay.io/fluentd_elasticsearch/elasticsearch:v6.6.1
    fluentd-elasticsearch:v2.4.0    quay.io/fluentd_elasticsearch/fluentd_elasticsearch:v2.4.0
    kibana-oss:6.6.1                docker.elastic.co/kibana/kibana-oss:6.6.1

Because those registries cannot be reached from my network, I looked up the corresponding images on the Alibaba Cloud registry:

    elasticsearch:6.6.1             registry.cn-hangzhou.aliyuncs.com/yfhub/elasticsearch:6.6.1
    fluentd-elasticsearch:v2.4.0    registry.cn-hangzhou.aliyuncs.com/yfhub/fluentd-elasticsearch:v2.4.0
    kibana-oss:6.6.1                registry.cn-hangzhou.aliyuncs.com/yfhub/kibana-oss:6.6.1

Pull the images with docker pull

    docker pull registry.cn-hangzhou.aliyuncs.com/yfhub/elasticsearch:6.6.1
    docker pull registry.cn-hangzhou.aliyuncs.com/yfhub/fluentd-elasticsearch:v2.4.0
    docker pull registry.cn-hangzhou.aliyuncs.com/yfhub/kibana-oss:6.6.1

Tag the images so that their names match what the YAML files expect

    docker tag registry.cn-hangzhou.aliyuncs.com/yfhub/elasticsearch:6.6.1 quay.io/fluentd_elasticsearch/elasticsearch:v6.6.1
    docker tag registry.cn-hangzhou.aliyuncs.com/yfhub/fluentd-elasticsearch:v2.4.0 quay.io/fluentd_elasticsearch/fluentd_elasticsearch:v2.4.0
    docker tag registry.cn-hangzhou.aliyuncs.com/yfhub/kibana-oss:6.6.1 docker.elastic.co/kibana/kibana-oss:6.6.1

Remove the image names that were used before tagging

    docker rmi registry.cn-hangzhou.aliyuncs.com/yfhub/elasticsearch:6.6.1
    docker rmi registry.cn-hangzhou.aliyuncs.com/yfhub/fluentd-elasticsearch:v2.4.0
    docker rmi registry.cn-hangzhou.aliyuncs.com/yfhub/kibana-oss:6.6.1
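The pull, tag, and remove steps repeat the same pattern for each image, so they can be generated from one table of name pairs. The sketch below assumes exactly the source and target names used in the commands above; the helper names `retag_commands` and `retag_images` are made up for illustration.

```shell
#!/usr/bin/env bash
# Mirror name -> name expected by the YAML, one pair per line,
# copied from the docker pull / docker tag commands in this section.
IMAGE_MAP='registry.cn-hangzhou.aliyuncs.com/yfhub/elasticsearch:6.6.1 quay.io/fluentd_elasticsearch/elasticsearch:v6.6.1
registry.cn-hangzhou.aliyuncs.com/yfhub/fluentd-elasticsearch:v2.4.0 quay.io/fluentd_elasticsearch/fluentd_elasticsearch:v2.4.0
registry.cn-hangzhou.aliyuncs.com/yfhub/kibana-oss:6.6.1 docker.elastic.co/kibana/kibana-oss:6.6.1'

# Print the three docker commands for every pair (hypothetical helper).
retag_commands() {
  printf '%s\n' "$IMAGE_MAP" | while read -r src dst; do
    echo "docker pull $src"
    echo "docker tag $src $dst"
    echo "docker rmi $src"
  done
}

# Run the printed commands one by one, stopping at the first failure.
retag_images() {
  retag_commands | while read -r cmd; do
    $cmd || exit 1
  done
}
```

Running `retag_commands` first shows what would be executed; `retag_images` then performs the same steps on a host where docker is available.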

Save the images as tar archives so that they can be distributed to the other nodes and loaded there

    docker save -o elasticsearch-v6.6.1 quay.io/fluentd_elasticsearch/elasticsearch:v6.6.1
    docker save -o fluentd-elasticsearch-v2.4.0 quay.io/fluentd_elasticsearch/fluentd_elasticsearch:v2.4.0
    docker save -o kibana-oss-6.6.1 docker.elastic.co/kibana/kibana-oss:6.6.1

Copy the archives to the other nodes

    scp -r elasticsearch-v6.6.1 fluentd-elasticsearch-v2.4.0 kibana-oss-6.6.1 k8s-node02:/root/

Load the images on Node02

    docker load -i elasticsearch-v6.6.1 && docker load -i fluentd-elasticsearch-v2.4.0 && docker load -i kibana-oss-6.6.1
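With more than one worker node, the copy and load steps have to be repeated per node. The sketch below generates those commands for a given host name. It assumes the archive names produced by docker save above and password-less ssh to the node; running docker load over ssh is a substitution for logging in to the node by hand, and the helper name `distribute_commands` is made up for illustration.

```shell
#!/usr/bin/env bash
# Archive names as produced by the docker save commands above.
ARCHIVES="elasticsearch-v6.6.1 fluentd-elasticsearch-v2.4.0 kibana-oss-6.6.1"

# Print the commands that copy the archives to one node and load them there.
# $1 is the node's host name, for example k8s-node02.
# ssh -n keeps ssh from consuming stdin when the output is piped into a shell.
distribute_commands() {
  node="$1"
  echo "scp $ARCHIVES $node:/root/"
  for a in $ARCHIVES; do
    echo "ssh -n $node docker load -i /root/$a"
  done
}
```

For example, `distribute_commands k8s-node02 | sh -e` would copy and load all three archives on that node and stop at the first error.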

4. Modify the official Kubernetes EFK YAML files

Contents of es-service.yaml (note: lines beginning with # are comments; in the original file they may be enabled)

    apiVersion: v1
    kind: Service
    metadata:
      name: elasticsearch-logging
      namespace: kube-system
      labels:
        k8s-app: elasticsearch-logging
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
        kubernetes.io/name: "Elasticsearch"
    spec:
      type: NodePort  # expose the port via NodePort so that elasticsearch-head can connect to Elasticsearch
      ports:
      - port: 9200
        protocol: TCP
        targetPort: db
      selector:
        k8s-app: elasticsearch-logging

Contents of es-statefulset.yaml (note: lines beginning with # are comments; in the original file they may be enabled)

    # RBAC authn and authz
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: elasticsearch-logging
      namespace: kube-system
      labels:
        k8s-app: elasticsearch-logging
        kubernetes.io/cluster-service: "true"  # newly added line
        addonmanager.kubernetes.io/mode: Reconcile
    ---
    kind: ClusterRole
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: elasticsearch-logging
      labels:
        k8s-app: elasticsearch-logging
        addonmanager.kubernetes.io/mode: Reconcile
        kubernetes.io/cluster-service: "true"  # newly added line
    rules:
    - apiGroups:
      - ""
      resources:
      - "services"
      - "namespaces"
      - "endpoints"
      verbs:
      - "get"
    ---
    kind: ClusterRoleBinding
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      namespace: kube-system
      name: elasticsearch-logging
      labels:
        k8s-app: elasticsearch-logging
        kubernetes.io/cluster-service: "true"  # newly added line
        addonmanager.kubernetes.io/mode: Reconcile
    subjects:
    - kind: ServiceAccount
      name: elasticsearch-logging
      namespace: kube-system
      apiGroup: ""
    roleRef:
      kind: ClusterRole
      name: elasticsearch-logging
      apiGroup: ""
    ---
    # Elasticsearch deployment itself
    apiVersion: apps/v1
    kind: StatefulSet
    metadata:
      name: elasticsearch-logging
      namespace: kube-system
      labels:
        k8s-app: elasticsearch-logging
        version: v6.6.1
        kubernetes.io/cluster-service: "true"  # newly added line
        addonmanager.kubernetes.io/mode: Reconcile
    spec:
      serviceName: elasticsearch-logging
      replicas: 2
      selector:
        matchLabels:
          k8s-app: elasticsearch-logging
          version: v6.6.1
      template:
        metadata:
          labels:
            k8s-app: elasticsearch-logging
            version: v6.6.1
            kubernetes.io/cluster-service: "true"  # newly added line
        spec:
          serviceAccountName: elasticsearch-logging
          containers:
          - image: quay.io/fluentd_elasticsearch/elasticsearch:v6.6.1
            name: elasticsearch-logging
            imagePullPolicy: IfNotPresent  # was Always by default; changed to IfNotPresent
            resources:
              # need more cpu upon initialization, therefore burstable class
              limits:
                cpu: 1000m
                # memory: 3Gi
              requests:
                cpu: 100m
                # memory: 3Gi
            ports:
            - containerPort: 9200
              name: db
              protocol: TCP
            - containerPort: 9300
              name: transport
              protocol: TCP
            # livenessProbe:
            #   tcpSocket:
            #     port: transport
            #   initialDelaySeconds: 5
            #   timeoutSeconds: 10
            # readinessProbe:
            #   tcpSocket:
            #     port: transport
            #   initialDelaySeconds: 5
            #   timeoutSeconds: 10
            volumeMounts:
            - name: elasticsearch-logging
              mountPath: /data
            env:
            - name: "NAMESPACE"
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
          volumes:
          - name: elasticsearch-logging
            emptyDir: {}
          # Elasticsearch requires vm.max_map_count to be at least 262144.
          # If your OS already sets up this number to a higher value, feel free
          # to remove this init container.
          initContainers:
          - image: alpine:3.6
            command: ["/sbin/sysctl", "-w", "vm.max_map_count=262144"]
            name: elasticsearch-logging-init
            securityContext:
              privileged: true

Contents of fluentd-es-ds.yaml; fluentd-es-configmap.yaml is left unchanged (note: lines beginning with # are comments; in the original file they may be enabled)

    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: fluentd-es
      namespace: kube-system
      labels:
        k8s-app: fluentd-es
        addonmanager.kubernetes.io/mode: Reconcile
    ---
    kind: ClusterRole
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: fluentd-es
      labels:
        k8s-app: fluentd-es
        addonmanager.kubernetes.io/mode: Reconcile
    rules:
    - apiGroups:
      - ""
      resources:
      - "namespaces"
      - "pods"
      verbs:
      - "get"
      - "watch"
      - "list"
    ---
    kind: ClusterRoleBinding
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: fluentd-es
      labels:
        k8s-app: fluentd-es
        addonmanager.kubernetes.io/mode: Reconcile
    subjects:
    - kind: ServiceAccount
      name: fluentd-es
      namespace: kube-system
      apiGroup: ""
    roleRef:
      kind: ClusterRole
      name: fluentd-es
      apiGroup: ""
    ---
    apiVersion: apps/v1
    kind: DaemonSet
    metadata:
      name: fluentd-es-v2.4.0
      namespace: kube-system
      labels:
        k8s-app: fluentd-es
        version: v2.4.0
        addonmanager.kubernetes.io/mode: Reconcile
    spec:
      selector:
        matchLabels:
          k8s-app: fluentd-es
          version: v2.4.0
      template:
        metadata:
          labels:
            k8s-app: fluentd-es
            version: v2.4.0
          # This annotation ensures that fluentd does not get evicted if the node
          # supports critical pod annotation based priority scheme.
          # Note that this does not guarantee admission on the nodes (#40573).
          annotations:
            seccomp.security.alpha.kubernetes.io/pod: 'docker/default'
        spec:
          priorityClassName: system-node-critical
          serviceAccountName: fluentd-es
          containers:
          - name: fluentd-es
            image: quay.io/fluentd_elasticsearch/fluentd_elasticsearch:v2.4.0  # be sure to update the image address
            env:
            - name: FLUENTD_ARGS
              value: --no-supervisor -q
            resources:
              limits:
                memory: 500Mi
              requests:
                cpu: 100m
                memory: 200Mi
            volumeMounts:
            - name: varlog
              mountPath: /var/log
            - name: varlibdockercontainers
              mountPath: /var/lib/docker/containers
              readOnly: true
            - name: config-volume
              mountPath: /etc/fluent/config.d
            # ports:
            # - containerPort: 24231
            #   name: prometheus
            #   protocol: TCP
            # livenessProbe:
            #   tcpSocket:
            #     port: prometheus
            #   initialDelaySeconds: 5
            #   timeoutSeconds: 10
            # readinessProbe:
            #   tcpSocket:
            #     port: prometheus
            #   initialDelaySeconds: 5
            #   timeoutSeconds: 10
          terminationGracePeriodSeconds: 30
          volumes:
          - name: varlog
            hostPath:
              path: /var/log
          - name: varlibdockercontainers
            hostPath:
              path: /var/lib/docker/containers
          - name: config-volume
            configMap:
              name: fluentd-es-config-v0.2.0

Contents of kibana-deployment.yaml (note: lines beginning with # are comments; in the original file they may be enabled)

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: kibana-logging
      namespace: kube-system
      labels:
        k8s-app: kibana-logging
        addonmanager.kubernetes.io/mode: Reconcile
    spec:
      replicas: 1
      selector:
        matchLabels:
          k8s-app: kibana-logging
      template:
        metadata:
          labels:
            k8s-app: kibana-logging
          annotations:
            seccomp.security.alpha.kubernetes.io/pod: 'docker/default'
        spec:
          containers:
          - name: kibana-logging
            image: docker.elastic.co/kibana/kibana-oss:6.6.1  # image address
            resources:
              # need more cpu upon initialization, therefore burstable class
              limits:
                cpu: 1000m
              requests:
                cpu: 100m
            env:
              # - name: ELASTICSEARCH_HOSTS
              - name: ELASTICSEARCH_URL
                value: http://elasticsearch-logging:9200
              # - name: SERVER_NAME
              #   value: kibana-logging
              - name: SERVER_BASEPATH
                value: ""  # Kibana is accessed via NodePort, so set value to an empty string
                # value: /api/v1/namespaces/kube-system/services/kibana-logging/proxy
              # - name: SERVER_REWRITEBASEPATH
              #   value: "false"
            ports:
            - containerPort: 5601
              name: ui
              protocol: TCP
            # The livenessProbe and readinessProbe checks can stay commented out; they are not required.
            # livenessProbe:
            #   httpGet:
            #     path: /api/status
            #     port: ui
            #   initialDelaySeconds: 5
            #   timeoutSeconds: 10
            # readinessProbe:
            #   httpGet:
            #     path: /api/status
            #     port: ui
            #   initialDelaySeconds: 5
            #   timeoutSeconds: 10

Contents of kibana-service.yaml (note: lines beginning with # are comments; in the original file they may be enabled)

    apiVersion: v1
    kind: Service
    metadata:
      name: kibana-logging
      namespace: kube-system
      labels:
        k8s-app: kibana-logging
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
        kubernetes.io/name: "Kibana"
    spec:
      type: NodePort  # added so that Kibana can be reached directly at IP:port
      ports:
      - port: 5601
        protocol: TCP
        targetPort: ui
      selector:
        k8s-app: kibana-logging
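The comment in es-statefulset.yaml says that Elasticsearch needs vm.max_map_count to be at least 262144 and that the init container can be dropped when the node already satisfies this. A minimal sketch for checking a node before applying the manifests follows; the helper names are made up for illustration, and the check assumes a Linux node with the sysctl command available.

```shell
#!/usr/bin/env bash
# Minimum value quoted in the es-statefulset.yaml comment.
REQUIRED_MAP_COUNT=262144

# Succeeds when the given value already meets the minimum.
map_count_ok() {
  [ "$1" -ge "$REQUIRED_MAP_COUNT" ]
}

# Report whether the current kernel setting on this node is sufficient.
check_map_count() {
  current="$(sysctl -n vm.max_map_count)" || return 1
  if map_count_ok "$current"; then
    echo "vm.max_map_count=$current is sufficient"
  else
    echo "vm.max_map_count=$current is below $REQUIRED_MAP_COUNT"
    return 1
  fi
}
```

Running `check_map_count` on each node shows whether the privileged init container is still doing useful work there.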

5. Apply all of the EFK YAML files. I keep every file EFK needs in one directory, /root/EFK.

    kubectl apply -f /root/EFK/

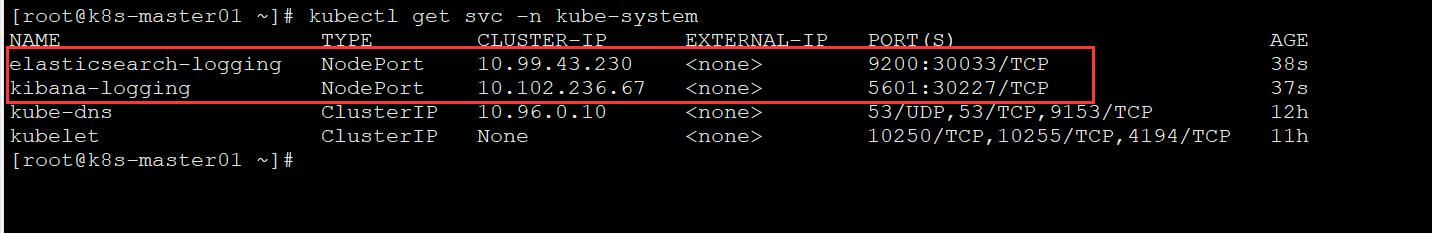
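After the apply, it can help to wait until each workload has finished rolling out. The sketch below prints one `kubectl rollout status` command per workload, using the kinds and names from the manifests in section 4. The 300-second timeout is an arbitrary assumption, and the helper name `rollout_commands` is made up for illustration.

```shell
#!/usr/bin/env bash
# Workloads created by the manifests in section 4, as kind/name pairs.
EFK_WORKLOADS="statefulset/elasticsearch-logging daemonset/fluentd-es-v2.4.0 deployment/kibana-logging"

# Print one "kubectl rollout status" command per workload.
rollout_commands() {
  for w in $EFK_WORKLOADS; do
    echo "kubectl -n kube-system rollout status $w --timeout=300s"
  done
}
```

On a machine with cluster access, `rollout_commands | sh -e` waits for the three workloads in turn and stops at the first one that does not become ready in time.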

6. Check the ports exposed by the Services

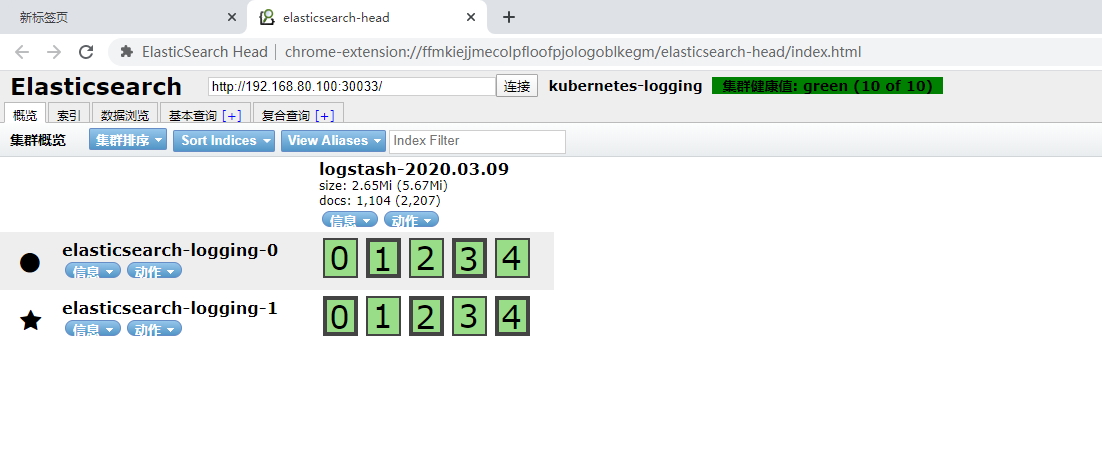
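The node port can also be read from the command line instead of a screenshot. The sketch below assumes the Service names from section 4 and the kube-system namespace; the helper names are made up for illustration. `nodeport_from_ports` parses one entry of the PORT(S) column that `kubectl get svc` prints for a NodePort Service.

```shell
#!/usr/bin/env bash
# Ask the API server for the first nodePort of a Service in kube-system.
# $1 is the Service name, e.g. elasticsearch-logging or kibana-logging.
service_nodeport() {
  kubectl -n kube-system get svc "$1" -o jsonpath='{.spec.ports[0].nodePort}'
}

# Extract the node port from a PORT(S) entry such as "9200:31234/TCP".
# Prints nothing when the entry has no node port (e.g. "9200/TCP").
nodeport_from_ports() {
  printf '%s\n' "$1" | sed -n 's/^[0-9]*:\([0-9]*\)\/.*$/\1/p'
}
```

For example, `service_nodeport kibana-logging` prints the port number to use together with any node's IP address.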

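7. Check Elasticsearch with elasticsearch-head

Install the elasticsearch-head extension in Google Chrome and connect to Elasticsearch with it to confirm that the connection works and that no errors are reported.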

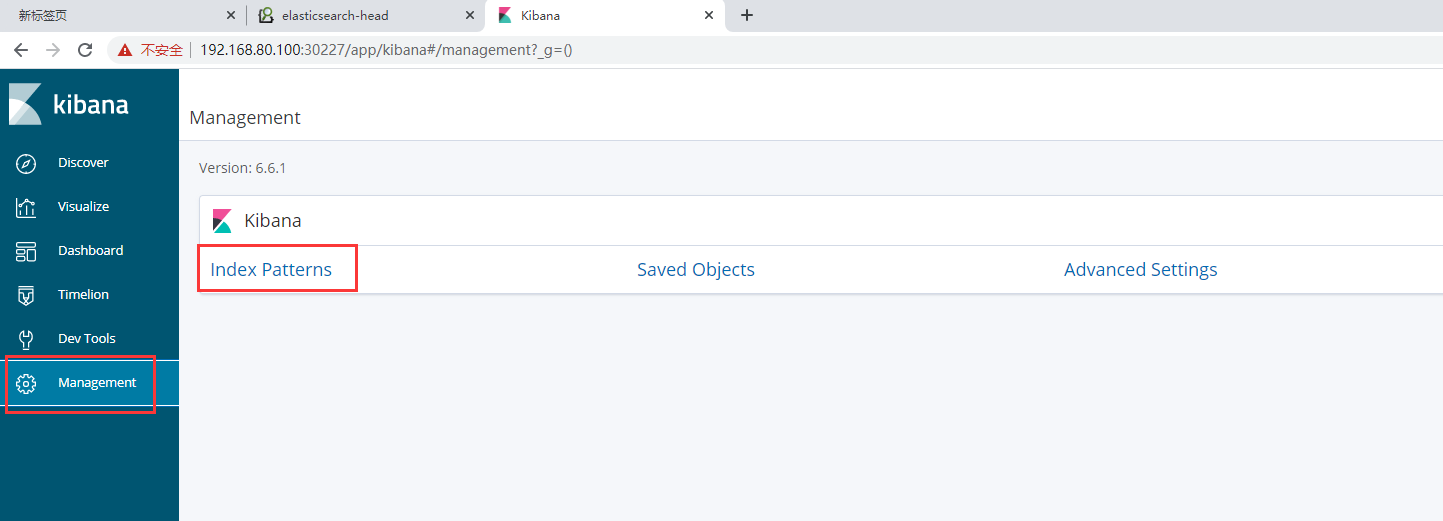

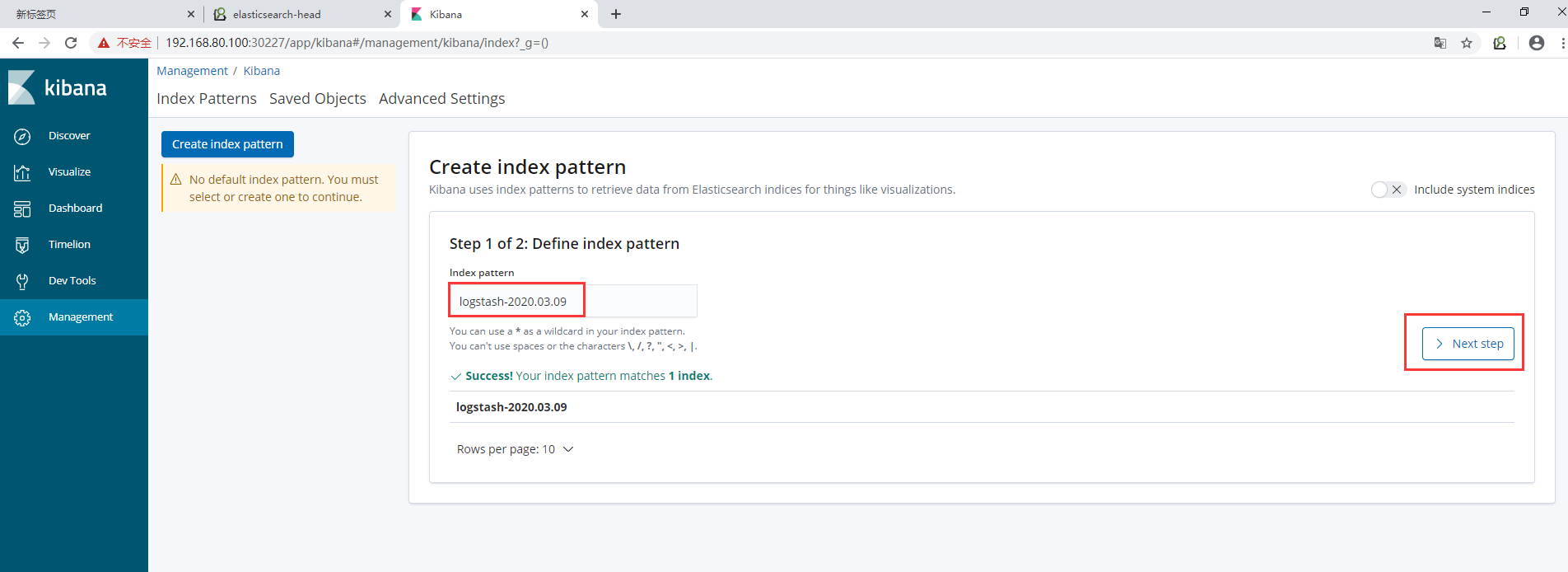

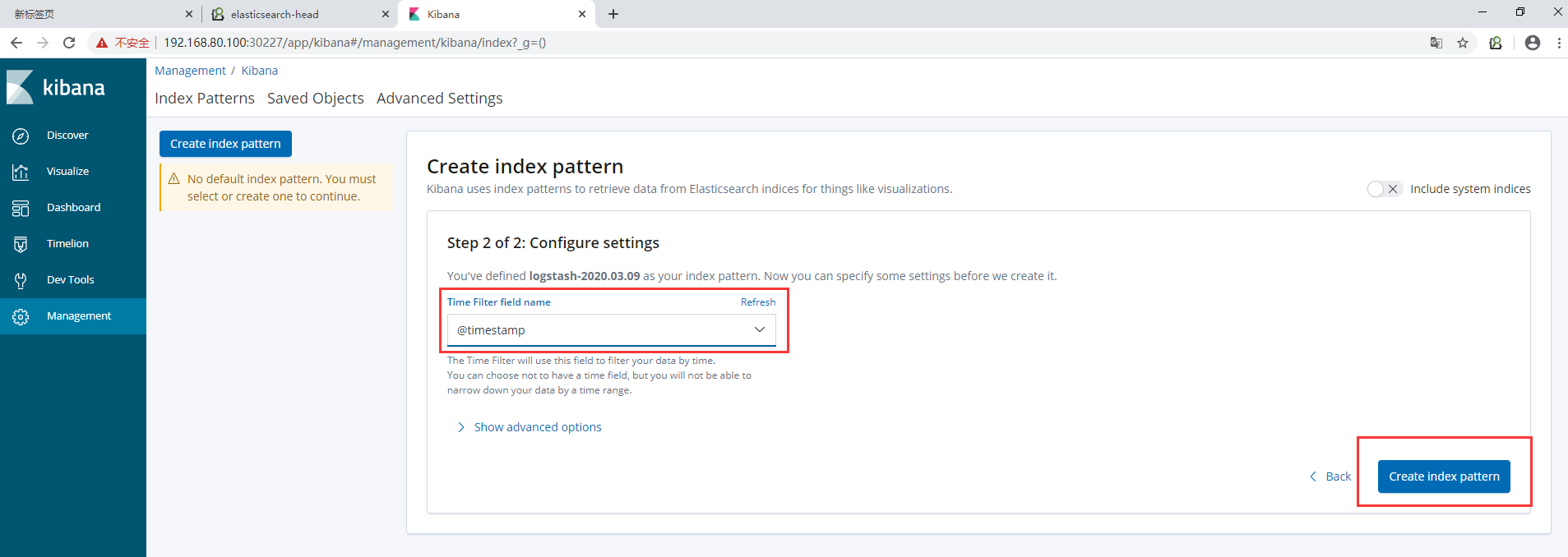

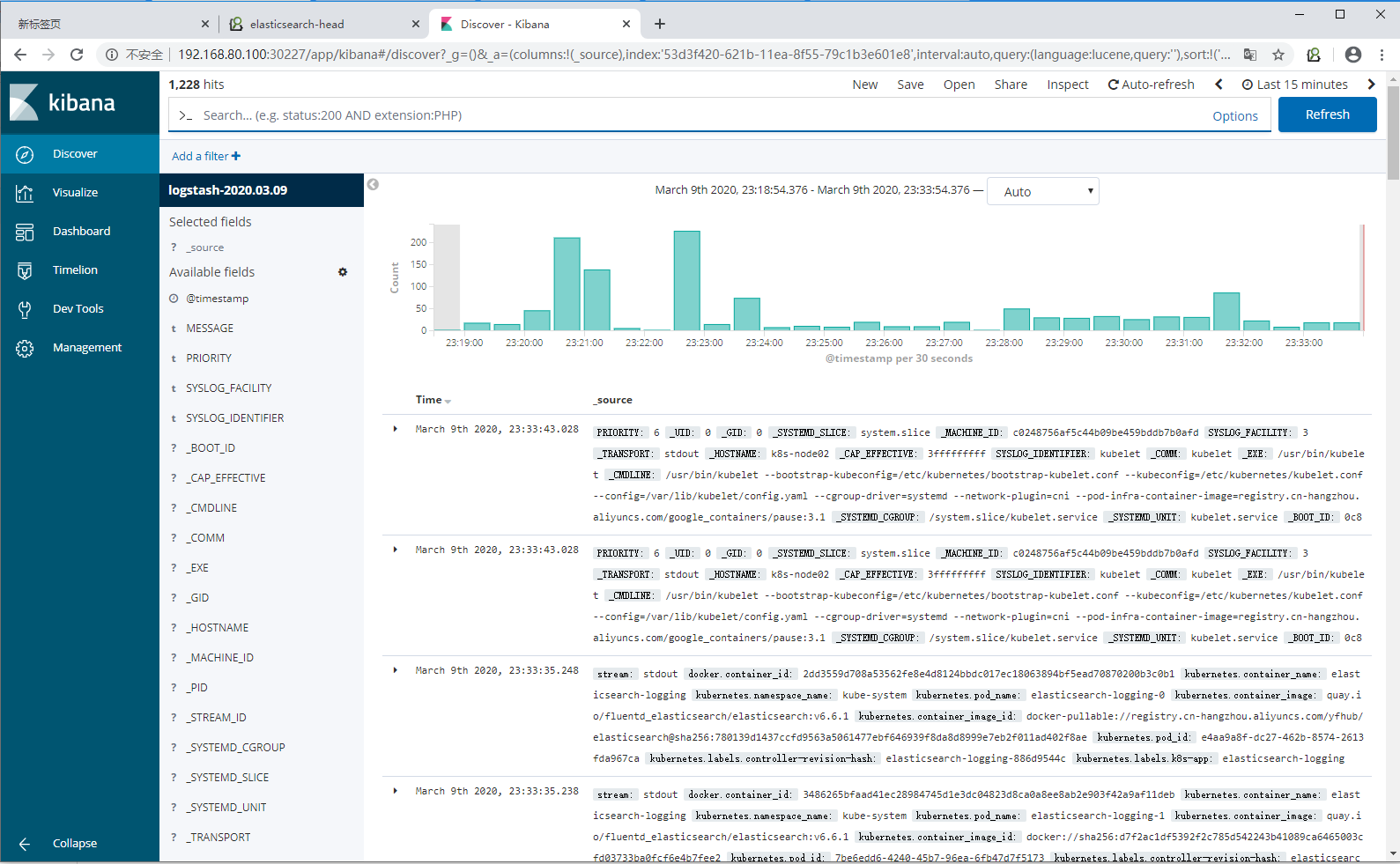
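The same check can be done without a browser by querying the Elasticsearch `_cluster/health` endpoint through the NodePort. This is a minimal sketch: the node IP and port are whatever your cluster reports, the helper names are made up for illustration, and the status is extracted with sed rather than a JSON parser to avoid extra dependencies.

```shell
#!/usr/bin/env bash
# Extract the "status" field (green, yellow or red) from a
# _cluster/health JSON response read from stdin.
health_status() {
  sed -n 's/.*"status"[[:space:]]*:[[:space:]]*"\([a-z]*\)".*/\1/p'
}

# Query cluster health through the NodePort. $1 = node IP, $2 = node port.
es_health() {
  curl -s "http://$1:$2/_cluster/health" | health_status
}
```

A result of green or yellow from `es_health <node-ip> <node-port>` indicates that Elasticsearch is reachable and answering requests.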

8. Access Kibana through the NodePort exposed by its Service
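Before opening Kibana in a browser at http://<node-ip>:<node-port>, a quick reachability check from the command line can be useful. The sketch below requests the /api/status path, the same path the commented-out probes in kibana-deployment.yaml use. The helper names are made up for illustration, and the node IP and port must be taken from your own cluster.

```shell
#!/usr/bin/env bash
# Build the Kibana status URL. $1 = node IP, $2 = node port.
kibana_status_url() {
  printf 'http://%s:%s/api/status\n' "$1" "$2"
}

# Print only the HTTP status code returned by Kibana.
kibana_http_code() {
  curl -s -o /dev/null -w '%{http_code}' "$(kibana_status_url "$1" "$2")"
}
```

A code of 200 from `kibana_http_code <node-ip> <node-port>` suggests Kibana is up and serving requests on that port.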
